Some women experimented by making specific changes to their LinkedIn profiles: they added a mustache to their profile picture and changed the gender from female to male. The result: the social network reportedly gave them a boost in visibility. Is its algorithm sexist?

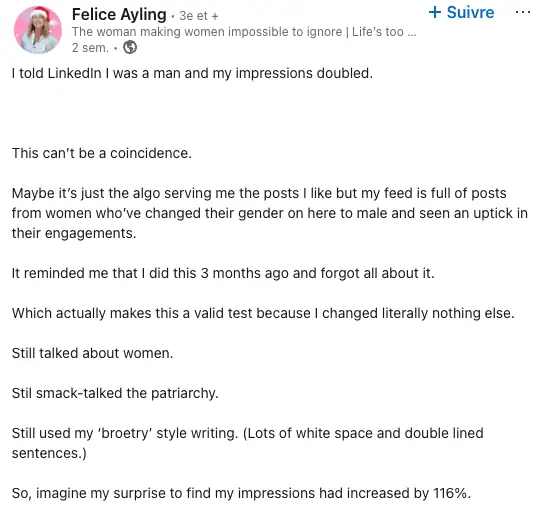

This story began on LinkedIn a few weeks ago. A user posted this status: “I indicated that I was a man and my reach doubled. It can’t be a coincidence.”

She claims that after making this change, the reach of her posts increased by 116%. Several people support her hypothesis and suggest that LinkedIn’s algorithm favors men over women.

On its blog, LinkedIn stated that its algorithm does not use demographic data to determine content visibility: “After testing the algorithm on this type of post, we confirm that its reach was not influenced by gender, pronouns, or any other demographic information.”

The biases of the algorithm

Because algorithms are designed by humans, the people behind LinkedIn’s algorithm likely passed on their own way of seeing the world, cognitive biases included, explains Gaëlle Falcon, associate professor at the Faculty of Administrative Sciences at Laval University.

“Since the IT sector is predominantly male, they may value keywords like performance and productivity. These are attributes that men generally include in their profiles more often than women. It’s not intentional. But indirectly, women place more emphasis on teamwork and collaboration. Men rely on more aggressive terms to highlight themselves,” she said in an interview.

So, is LinkedIn’s algorithm sexist?

The team behind LinkedIn may well say that its algorithm does not rely on demographic information, but we would need to see how it works firsthand to draw our own conclusions, says Nadia Serraiocco, associate professor at UQAM, specializing in identity and artificial intelligence.

“An algorithm is like a recipe. On LinkedIn, you could imagine that authority could be an ingredient in that recipe,” she explains.

If LinkedIn’s algorithm is trained with artificial intelligence, we need to determine what parameters have been assigned to the “authority” ingredient, according to Ms. Serraiocco. “Often, algorithms and artificial intelligence tend to perpetuate cognitive biases. For example, a figure of intellectual authority will be a man,” she mentions.

Could the change of photo or gender in the profile have had an impact? It’s quite uncertain, explains Ms. Serraiocco.

But the algorithm is sensitive to the vocabulary used, according to Nadia Serraiocco. “People make AI-generated posts and reply with AI. This is frustrating for some. However, the posts generate thousands of views,” she says.